Teachers have always had to deal with cheating, but AI has added a whole new twist. One high school English teacher knew their students were leaning on ChatGPT to churn out essays, and they were sick of the denials whenever they called it out. Accusing kids directly was tricky without hard proof, and administration might not even back them up.

So instead of fighting AI head-on, this teacher got clever. They set up a creative writing assignment with one hidden trap buried in the directions. Within days, the laziest cheaters walked right into it, exposing themselves in the funniest way possible… and earning a brutal grade that stung worse than a zero.

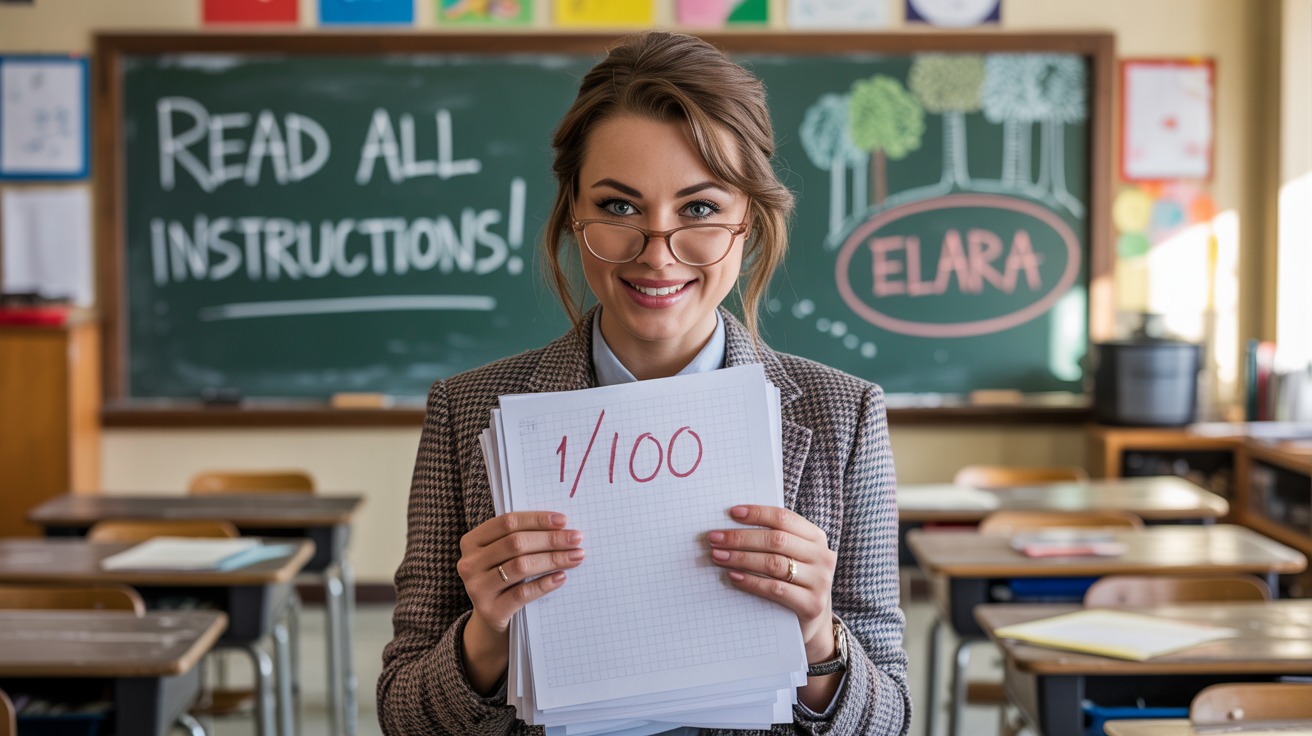

A teacher explained that while students often ignored instructions, the real headache was AI-generated writing

OP later edited the post:

Educators across the globe are grappling with how to handle the rise of AI in classrooms. While it is possible to recognize patterns of AI-generated writing, proving authorship is another matter. Research has shown that AI-detection tools are not reliable enough to be used as the sole basis for academic decisions.

A Stanford University study found that commonly used AI detectors often mislabel human-written essays, particularly those written by non-native English speakers, as AI-generated. This makes blanket punishments problematic both ethically and legally.

Instead, experts recommend focusing on instruction, assessment design, and academic integrity policies rather than punitive guesswork.

The International Society for Technology in Education (ISTE) suggests that teachers shift toward assignments that require students to show process, such as drafts, outlines, and revisions, so that their individual voice and progression become visible. This approach makes it harder for a student to rely solely on AI tools without being detected.

Assessment format also plays a role. According to the American Psychological Association (APA), oral assessments, in-class writing tasks, and personalized prompts tied to class discussions are more resistant to AI misuse because they rely on context only the student would know.

When homework or take-home essays are assigned, requiring students to reflect on their creative process or annotate how they revised their work can help teachers distinguish genuine effort from wholesale AI submissions.

At the same time, some schools are moving toward integration rather than prohibition. The UK Department for Education recently advised educators to treat AI as a tool students must learn to use responsibly, much like calculators or the internet in earlier decades.

This includes teaching critical editing skills, expecting transparency about usage, and requiring students to submit prompts and transcripts alongside their work.

The reality is that suspicion alone cannot form the basis for discipline. Teachers who punish without verifiable evidence risk undermining trust and creating adversarial relationships. Clear classroom policies, fair grading systems, and transparent expectations remain the most effective way forward.

Rather than focusing solely on “catching” misuse, experts stress the importance of guiding students toward understanding when AI can be a helpful supplement and when it undermines their learning.

Here’s what Redditors had to say:

These Reddit users suggested adding hidden instructions in white text so AI would regurgitate absurd details like “a duck, a xylophone, and a hatstand”

Some users reminisced about classic “read all instructions first” pranks, where students ended up putting socks in trees or doodling smiley faces on assignments

This group recalled professors catching cheaters long before AI

Some commenters debated solutions: embrace AI but require logs and edits, or scrap take-home essays altogether in favor of handwritten in-class writing

One user reminded the teacher that they’re really catching lazy ChatGPT users, not all AI use

What started as an everyday headache for one teacher turned into a masterclass in creative problem-solving. By slipping in a single rule, they caught cheaters without uttering the word “AI.” Students learned that shortcuts can backfire, and the internet got a story worth sharing.

So, what do you think? Should teachers embrace AI as part of the writing process, or keep inventing clever traps like the “Elara rule”? And if you were grading, would you give a 0… or that stinging 1/100?